GlusterFS is an open source distributed file system that provides easy replication across multiple storage nodes. These nodes are combined into storage volumes, which you can easily mount using fstab on Ubuntu/Debian and Red Hat/CentOS. For how to set up a GlusterFS volume, see this blog post.

Before we can mount the volume, we need to install the GlusterFS client. On Ubuntu/Debian we can simply install the required package with apt-get, and on Red Hat/CentOS with yum. For Ubuntu/Debian:

apt-get install glusterfs-client

For Red Hat, OEL and CentOS:

yum install glusterfs glusterfs-fuse

Once the install is complete, open /etc/fstab and add a new line pointing to your server. The server named here is only used to fetch the volume's configuration, which tells the client where the data lives; it does not need to hold the data itself. The client then connects directly to the servers that hold the data. The following steps are the same on both Debian- and Red Hat-based Linux distributions.

Easy way to mount

vi /etc/fstab

Replace [HOST] with your GlusterFS server, [VOLNAME] with the GlusterFS volume to mount and [MOUNT] with the location to mount the storage to.

[HOST]:/[VOLNAME] [MOUNT] glusterfs defaults,_netdev 0 0
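If you manage several clients, you may prefer to generate this line rather than type it. The sketch below is a minimal POSIX shell helper, assuming the entry format shown above; the function name make_fstab_entry is hypothetical and not part of GlusterFS.

```shell
#!/bin/sh
# Hypothetical helper: print a GlusterFS fstab entry in the
# HOST:/VOLNAME MOUNT glusterfs defaults,_netdev 0 0 format.
# Usage: make_fstab_entry HOST VOLNAME MOUNT
make_fstab_entry() {
    host=$1
    volname=$2
    mount_point=$3
    # Refuse to build a partial entry
    if [ -z "$host" ] || [ -z "$volname" ] || [ -z "$mount_point" ]; then
        echo "usage: make_fstab_entry HOST VOLNAME MOUNT" >&2
        return 1
    fi
    # _netdev marks the mount as needing the network, so it waits for networking at boot
    printf '%s:/%s %s glusterfs defaults,_netdev 0 0\n' "$host" "$volname" "$mount_point"
}

make_fstab_entry gfs1.jamescoyle.net datastore /mnt/datastore
```

With the values from the example, this prints the same line as the one shown below.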

Example:

gfs1.jamescoyle.net:/datastore /mnt/datastore glusterfs defaults,_netdev 0 0

Finally, reboot your machine (or run mount -a as root) so the volume is mounted, then check that it appears in df.

df -h

gfs1.jamescoyle.net:/testvol 30G 1.2G 27G 5% /mnt/volume
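To check the mount from a script instead of reading df by eye, something like the following could be used. It is a sketch that assumes a Linux client, where the native GlusterFS client usually appears in /proc/mounts with the type fuse.glusterfs; the helper name is_glusterfs_mounted is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed if MOUNT is listed in the mount table with a
# filesystem type containing "glusterfs" (matches glusterfs and fuse.glusterfs).
# Usage: is_glusterfs_mounted MOUNT [MOUNTS_FILE]
is_glusterfs_mounted() {
    mount_point=$1
    mounts_file=${2:-/proc/mounts}
    # /proc/mounts fields: device mountpoint fstype options dump pass
    awk -v mp="$mount_point" '
        $2 == mp && $3 ~ /glusterfs/ { found = 1 }
        END { exit !found }
    ' "$mounts_file"
}

if is_glusterfs_mounted /mnt/datastore; then
    df -h /mnt/datastore
else
    echo "/mnt/datastore is not a mounted GlusterFS volume" >&2
fi
```

Mount points containing spaces are escaped in /proc/mounts (for example as \040), so this simple comparison assumes paths without spaces.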

More redundant mount

The trouble with the above method is that there is a single point of failure: the client only has one GlusterFS server to connect to, so if that server is down when the volume is mounted, the mount fails. To set up a more resilient mount, we have two options: use backupvolfile-server in the fstab entry, or create a volume config file. Remember that this does not specify where all the distributed bricks are; it only specifies another server that can be queried for the volume's bricks.

fstab method

We can use the backupvolfile-server option to point to our secondary server. The example below shows how it could be used.

gfs1.jamescoyle.net:/datastore /mnt/datastore glusterfs defaults,_netdev,backupvolfile-server=gfs2.jamescoyle.net 0 0
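If you already added the simple entry from earlier, the option can be appended to it rather than retyping the whole line. The sketch below assumes a standard whitespace-separated fstab and only prints the updated file, so you can review it before replacing /etc/fstab; add_backup_server is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print FSTAB_FILE with backupvolfile-server=BACKUP
# appended to the options of the glusterfs entry whose device is SOURCE
# (e.g. gfs1.jamescoyle.net:/datastore). Other lines pass through unchanged,
# and an entry that already has the option is left alone.
# Usage: add_backup_server SOURCE BACKUP [FSTAB_FILE]
add_backup_server() {
    source_dev=$1
    backup=$2
    fstab=${3:-/etc/fstab}
    awk -v src="$source_dev" -v bk="$backup" '
        # fstab fields: device mountpoint type options dump pass
        $1 == src && $3 == "glusterfs" && $4 !~ /backupvolfile-server=/ {
            # Assigning $4 rebuilds the line with single spaces between fields
            $4 = $4 ",backupvolfile-server=" bk
        }
        { print }
    ' "$fstab"
}
```

For example, add_backup_server gfs1.jamescoyle.net:/datastore gfs2.jamescoyle.net > /tmp/fstab.new followed by diff /etc/fstab /tmp/fstab.new shows the change before you copy it into place.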

Using a volume config file

Create a volume config file for your GlusterFS client.

vi /etc/glusterfs/datastore.vol

Create the file above, replacing [HOST1] with your first GlusterFS server, [HOST2] with your second GlusterFS server and [VOLNAME] with the GlusterFS volume to mount.

volume remote1
  type protocol/client
  option transport-type tcp
  option remote-host [HOST1]
  option remote-subvolume [VOLNAME]
end-volume

volume remote2
  type protocol/client
  option transport-type tcp
  option remote-host [HOST2]
  option remote-subvolume [VOLNAME]
end-volume

volume replicate
  type cluster/replicate
  subvolumes remote1 remote2
end-volume

volume writebehind
  type performance/write-behind
  option window-size 1MB
  subvolumes replicate
end-volume

volume cache
  type performance/io-cache
  option cache-size 512MB
  subvolumes writebehind
end-volume

Example:

volume remote1
  type protocol/client
  option transport-type tcp
  option remote-host gfs1.jamescoyle.net
  option remote-subvolume /mnt/datastore
end-volume

volume remote2
  type protocol/client
  option transport-type tcp
  option remote-host gfs2.jamescoyle.net
  option remote-subvolume /mnt/datastore
end-volume

volume replicate
  type cluster/replicate
  subvolumes remote1 remote2
end-volume

volume writebehind
  type performance/write-behind
  option window-size 1MB
  subvolumes replicate
end-volume

volume cache
  type performance/io-cache
  option cache-size 512MB
  subvolumes writebehind
end-volume
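Since the two protocol/client sections differ only in the host name, the file could also be generated. The following is a sketch that reproduces the layout above (the same translators, and the same window-size and cache-size values); the function names client_section and make_client_volfile are hypothetical, and you should check the output before redirecting it to /etc/glusterfs/datastore.vol.

```shell
#!/bin/sh
# Hypothetical helpers: print a two-server replicated client volfile using the
# same translators and values as the datastore.vol example above.
# Usage: make_client_volfile HOST1 HOST2 REMOTE_SUBVOLUME

# Print one protocol/client section: NAME HOST REMOTE_SUBVOLUME
client_section() {
    cat <<EOF
volume $1
  type protocol/client
  option transport-type tcp
  option remote-host $2
  option remote-subvolume $3
end-volume

EOF
}

make_client_volfile() {
    client_section remote1 "$1" "$3"
    client_section remote2 "$2" "$3"
    # Replicate across both servers, then layer write-behind and io-cache on top
    cat <<'EOF'
volume replicate
  type cluster/replicate
  subvolumes remote1 remote2
end-volume

volume writebehind
  type performance/write-behind
  option window-size 1MB
  subvolumes replicate
end-volume

volume cache
  type performance/io-cache
  option cache-size 512MB
  subvolumes writebehind
end-volume
EOF
}

make_client_volfile gfs1.jamescoyle.net gfs2.jamescoyle.net /mnt/datastore
```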

Finally, edit fstab to add this config file and its mount point. Replace [MOUNT] with the location to mount the storage to.

/etc/glusterfs/datastore.vol [MOUNT] glusterfs rw,allow_other,default_permissions,max_read=131072 0 0
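Appending the line by hand risks adding it twice if you repeat these steps. Below is a minimal sketch that only appends when an identical line is not already present, assuming it is run as root; append_fstab_line is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: append LINE to FSTAB_FILE (default /etc/fstab) only if
# an identical line is not already there, so re-running it is harmless.
# Usage: append_fstab_line LINE [FSTAB_FILE]
append_fstab_line() {
    line=$1
    fstab=${2:-/etc/fstab}
    # -F: fixed string, -x: whole-line match, -q: quiet
    if grep -Fxq -- "$line" "$fstab"; then
        echo "already present: $line"
    else
        printf '%s\n' "$line" >> "$fstab"
        echo "added: $line"
    fi
}
```

For example, with /mnt/datastore standing in for [MOUNT]: append_fstab_line '/etc/glusterfs/datastore.vol /mnt/datastore glusterfs rw,allow_other,default_permissions,max_read=131072 0 0'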

When you create a new GlusterFS Volume it is publicly available for any server on the network to read.

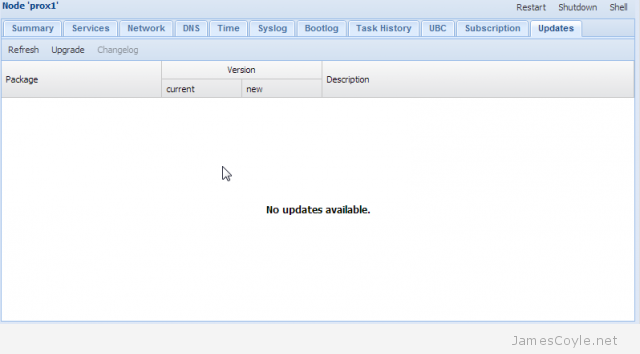

When you create a new GlusterFS Volume it is publicly available for any server on the network to read. A new release of Proxmox is now available, release 3.1.

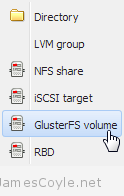

A new release of Proxmox is now available, release 3.1. This is a new storage plugin which can be used to add usable storage to your Proxmox host. GlusterFS is an open source, distributed file system with potential to house a huge capacity of data.

This is a new storage plugin which can be used to add usable storage to your Proxmox host. GlusterFS is an open source, distributed file system with potential to house a huge capacity of data.