Manually Mount a Ceph Storage Pool in Proxmox

The latest beta of Proxmox, soon to be released as version 3.2, comes with the Ceph client installed automatically, which makes mounting Ceph storage pools painless.

You can mount the Ceph storage pool using the Proxmox web GUI.

If you use authentication on your Ceph storage pool, you will first need to copy the Ceph keyring from your Ceph server to your Proxmox server. Log in to your Ceph server via SSH and run the scp command below. Replace [PROXMOX_SERVER] with the host name or IP address of your Proxmox server, and [STORAGE_NAME] with the name you will give the storage mount in the Proxmox web GUI.

scp /etc/ceph/ceph.client.admin.keyring [PROXMOX_SERVER]:/etc/pve/priv/ceph/[STORAGE_NAME].keyring

For example:

scp /etc/ceph/ceph.client.admin.keyring prox3.jamescoyle.net:/etc/pve/priv/ceph/ceph_storage.keyring

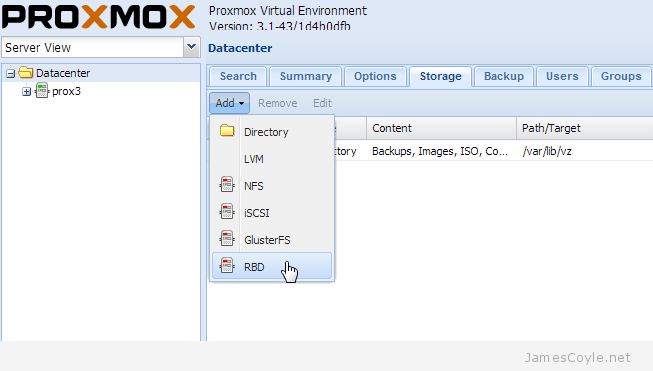

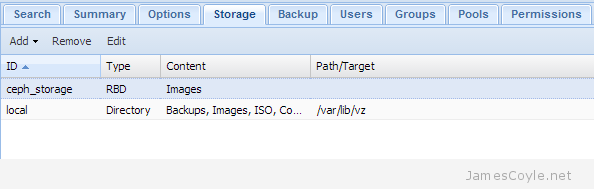
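
The copy step above can be wrapped in a small shell helper so the destination file name always follows the [STORAGE_NAME].keyring pattern. This is only a sketch: the function names `keyring_dest` and `copy_keyring` and the `DRY_RUN` variable are made up for illustration, and it assumes the admin keyring lives at the path used in the scp command above.

```shell
# Hypothetical helper: print the keyring path Proxmox expects for a storage ID.
# The directory is taken from the scp command shown above.
keyring_dest() {
    printf '/etc/pve/priv/ceph/%s.keyring\n' "$1"
}

# Hypothetical wrapper around scp; run it on the Ceph server.
# $1 = Proxmox host name or IP, $2 = storage name used in the web GUI.
# Set DRY_RUN=1 to print the command instead of running it.
copy_keyring() {
    src=/etc/ceph/ceph.client.admin.keyring
    dest="$1:$(keyring_dest "$2")"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf 'scp %s %s\n' "$src" "$dest"
    else
        scp "$src" "$dest"
    fi
}
```

Running `DRY_RUN=1 copy_keyring prox3.jamescoyle.net ceph_storage` prints the same scp command as the example above, which is a cheap way to check the names before copying anything.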

To use the Proxmox GUI to mount a Ceph storage pool, log in to the web GUI and click on the Datacenter folder in the top left, then click the Storage tab, then Add, and finally RBD.

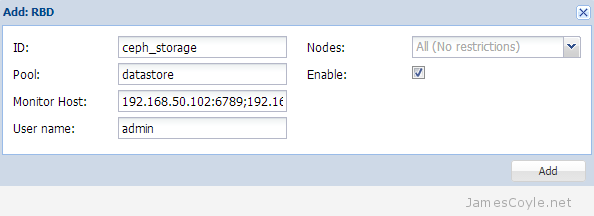

Enter the details of your Ceph storage pool in the new dialogue box which appears.

- ID: the name this storage mount will have in Proxmox. If you use authentication on your Ceph server, this must match the [STORAGE_NAME] used for the keyring file above.

- Pool: the Ceph storage pool name.

- Monitor Host: the host names or IP addresses of your Ceph monitor servers. You can enter a single host, or multiple hosts separated by semicolons (;).

- User name: the name of the user to use when connecting to the Ceph storage pool. If you are not using user authentication, set this to the value below.

admin (optional, default = admin)
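
Because the ID has to match the keyring file name when authentication is in use, it can be worth checking for the file on the Proxmox host before filling in the dialogue. A minimal sketch, assuming the keyring directory used in the scp command earlier; the function name `keyring_matches_id` is hypothetical.

```shell
# Hypothetical check: succeeds only if a keyring named after the storage ID exists.
# $1 = storage ID you plan to enter in the GUI.
# $2 = keyring directory (optional, defaults to the path used by the scp step).
keyring_matches_id() {
    dir="${2:-/etc/pve/priv/ceph}"
    [ -f "$dir/$1.keyring" ]
}
```

For example, `keyring_matches_id ceph_storage && echo "keyring found"` prints the message only when /etc/pve/priv/ceph/ceph_storage.keyring is present.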

Don’t forget to make sure that the Proxmox host can reach the remote Ceph monitor hosts – by default a Ceph monitor runs on port 6789.
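
One way to test reachability from the Proxmox host is to probe each monitor with netcat. The sketch below assumes an `nc` binary that supports the `-z` (scan only) and `-w` (timeout) options, which most netcat variants do; the function names `split_monitors` and `check_monitors` are made up for illustration.

```shell
# Split a semicolon-separated monitor list into one host per line,
# trimming surrounding whitespace and dropping empty entries.
split_monitors() {
    printf '%s\n' "$1" | tr ';' '\n' |
        sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' -e '/^$/d'
}

# Probe every monitor on the given TCP port (default 6789).
# $1 = monitor list as entered in the GUI, $2 = port (optional).
check_monitors() {
    port="${2:-6789}"
    split_monitors "$1" | while read -r host; do
        # Assumption: nc accepts -z and -w; stdin is redirected so nc
        # cannot consume the remaining host list.
        if nc -z -w 3 "$host" "$port" </dev/null 2>/dev/null; then
            printf '%s:%s reachable\n' "$host" "$port"
        else
            printf '%s:%s NOT reachable\n' "$host" "$port"
        fi
    done
}
```

Calling `check_monitors "192.168.1.10;192.168.1.11"` (placeholder addresses) reports one line per monitor, so a firewall problem shows up before Proxmox fails to connect.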
