Fail2ban is an application that scans log files in real time and bans malicious IP addresses based on a set of rules and filters you can set.

For this blog post, we’re going to look at capturing invalid login attempts to the Proxmox Web GUI and banning any IP address that fails to authenticate 3 times.

Fail2ban is made up of three main components:

- Filter – a Filter is a pattern or regular expression that we wish to search for in the log files. In our case, we want to search for the words ‘authentication failure’ in the log because that’s what the pvedaemon writes when a failed login attempt occurs.

- Action – an Action is what we’ll do if the filter is found. What we need to do is ban any IP address where the filter is triggered 3 times.

- Jail – a Jail in Fail2ban is the glue that holds it all together; it ties a Filter together with an Action and the relevant log file.
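To make the relationship between the three parts concrete, here is a minimal toy model in Python of the decision a Jail makes: each Filter match counts as a failure for an IP address, and the third failure triggers the ban Action. This is only a sketch and not Fail2ban's actual code. The class name `ToyJail`, its methods, and the 600 second counting window are assumptions made for illustration.

```python
from collections import defaultdict, deque

MAX_RETRY = 3     # failures allowed before a ban, as used in this post
FIND_TIME = 600   # assumed counting window in seconds (illustrative value)
BAN_TIME = 3600   # ban length in seconds (one hour)


class ToyJail:
    """Toy model of a jail: counts filter hits per IP and decides on bans."""

    def __init__(self, max_retry=MAX_RETRY, find_time=FIND_TIME, ban_time=BAN_TIME):
        self.max_retry = max_retry
        self.find_time = find_time
        self.ban_time = ban_time
        self.failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self.banned_until = {}              # ip -> time at which the ban expires

    def is_banned(self, ip, now):
        return self.banned_until.get(ip, 0) > now

    def record_failure(self, ip, now):
        """Register one filter match; return True if it triggers a ban."""
        hits = self.failures[ip]
        hits.append(now)
        # Forget failures that have fallen out of the counting window.
        while hits and now - hits[0] > self.find_time:
            hits.popleft()
        if len(hits) >= self.max_retry:
            self.banned_until[ip] = now + self.ban_time
            hits.clear()
            return True
        return False


jail = ToyJail()
results = [jail.record_failure("10.10.10.10", t) for t in (0, 10, 20)]
print(results)                             # [False, False, True]
print(jail.is_banned("10.10.10.10", 30))   # True
```

In the real tool the "ban" step is carried out by an Action, typically a firewall rule, rather than by a dictionary entry as in this sketch.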

Install Fail2ban

Installing Fail2ban on Debian/Proxmox is as easy as it gets – just use the apt package manager.

apt-get install fail2ban

Fail2ban is mostly written in Python, so Python needs to be present on the system; if it isn’t, apt-get will install it as a dependency.

Note: by default Fail2ban will enable itself on SSH connections, blocking invalid IPs after 6 invalid attempts.

Configure Fail2ban for the Proxmox Web GUI

There are several steps to setting up Fail2ban. As mentioned earlier in the post, we want to ban any user’s IP address from accessing the Proxmox Web GUI if they have failed to authenticate 3 times. We shouldn’t block them indefinitely because it may be a simple password issue that they can resolve with the account administrator. We’ll configure Fail2ban to ban failed attempts for an hour.

Because banning a user after 3 invalid attempts is a fairly basic thing in the world of Fail2ban, we won’t need to create an Action as listed above. We’ll need to create a Jail and a Filter.

The Jail

A Jail in Fail2ban is the core configuration that combines a Filter, an Action (although this may be default Fail2ban behaviour) and a log file.

The default configuration for Fail2ban is found in /etc/fail2ban/jail.conf and contains many predefined entries for common processes such as FTP and Apache. We shouldn’t edit this file directly when adding new entries. Instead, we should create the file below, which will be used to override the default jail.conf.

vi /etc/fail2ban/jail.local

Add the following (this file may not already exist):

[proxmox-web-gui]

enabled = true

port = http,https,8006

filter = proxmox-web-gui

logpath = /var/log/daemon.log

maxretry = 3

bantime = 3600

The above entry has set a ruleset name of proxmox-web-gui, and the following:

- enabled – this simply states that this ruleset is active.

- port – sets the ports that any bans should act on.

- filter – this sets the file name of the filter that we’ll use to detect any login failures. More about this in the next section.

- logpath – the name or pattern (for example /var/log/apache/*.log) of the log to monitor for the failed logins. This is the file that the above filter will work on.

- maxretry – this is how many times the filter should detect a problem before starting the ban.

- bantime – this is how long, in seconds, the ban will be in effect for. 3600 seconds gives us the one hour ban we wanted.
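As a quick sanity check of the values above, the Jail entry can be parsed with Python's standard `configparser` module. This is only a sketch for inspecting the settings; it is not a claim about how Fail2ban itself loads the file, and the variable names are made up for the example. The `bantime` of 3600 is treated as seconds here, matching the one hour ban described earlier.

```python
import configparser

# The Jail entry from this post, inlined so the example is self-contained.
JAIL_LOCAL = """
[proxmox-web-gui]
enabled = true
port = http,https,8006
filter = proxmox-web-gui
logpath = /var/log/daemon.log
maxretry = 3
bantime = 3600
"""

parser = configparser.ConfigParser()
parser.read_string(JAIL_LOCAL)
section = parser["proxmox-web-gui"]

enabled = section.getboolean("enabled")   # "true" -> True
ports = section["port"].split(",")        # one entry per port or service name
max_retry = section.getint("maxretry")
ban_hours = section.getint("bantime") / 3600

print(enabled, ports, max_retry, ban_hours)
```

Printing the parsed values this way makes it easy to spot a typo in a key name or a ban time that is off by a factor of 60 before restarting the service.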

The Filter

Now that we have specified which log file to look in, we need to specify how to find the event we are interested in. In our example, Proxmox writes a specific string each time a failed login occurs, which looks like the below:

authentication failure; rhost=10.10.10.10 [email protected] msg=no such user ('[email protected]')

Our Filter, therefore, needs to look for this text and pull out the IP address.

Create a Filter file called proxmox-web-gui.conf in /etc/fail2ban/filter.d/.

vi /etc/fail2ban/filter.d/proxmox-web-gui.conf

Add the following:

[Definition]

failregex = pvedaemon\[[0-9]+\]: authentication failure; rhost=<HOST> user=.* msg=.*

This will match the text that Proxmox writes to the daemon.log file when a failed login is detected. It contains the Fail2ban-specific keyword <HOST>, which tells Fail2ban where the offending IP address is in the log entry. Fail2ban can then block this IP address as indicated in our Jail file.
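To see roughly what the failregex does, the sketch below swaps <HOST> for a named capture group and runs the pattern with Python's `re` module. The IPv4-only `HOST_PATTERN` is a simplified stand-in for whatever Fail2ban substitutes internally, and the sample log lines (timestamp, host name, process ID and user name) are hypothetical values made up for the example.

```python
import re

# The failregex from the Filter file above.
FAILREGEX = r"pvedaemon\[[0-9]+\]: authentication failure; rhost=<HOST> user=.* msg=.*"

# Simplified stand-in for <HOST>: IPv4 addresses only (an assumption for this sketch).
HOST_PATTERN = r"(?P<host>\d{1,3}(?:\.\d{1,3}){3})"

pattern = re.compile(FAILREGEX.replace("<HOST>", HOST_PATTERN))

# Hypothetical log lines; the host name, PID and user name are invented.
failed_line = (
    "May 29 12:31:14 prox1 pvedaemon[1234]: authentication failure; "
    "rhost=10.10.10.10 user=baduser@pam msg=no such user ('baduser@pam')"
)
other_line = "May 29 12:32:01 prox1 pvedaemon[1234]: some unrelated message"

match = pattern.search(failed_line)
offender = match.group("host") if match else None
print(offender)                           # 10.10.10.10
print(pattern.search(other_line) is None) # True
```

The named group plays the role of <HOST>: it is the part of the matched line that would be handed to the ban Action.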

Testing Fail2ban Filters

Fail2ban provides a nice little utility to test your Filter definitions to make sure they are working as you intend. First things first – we need an entry in our log file for an invalid login attempt. Go to your Proxmox Web GUI and enter some invalid login credentials.

The command to use is fail2ban-regex, which takes two parameters: the log file location and the Filter location.

fail2ban-regex /var/log/daemon.log /etc/fail2ban/filter.d/proxmox-web-gui.conf

An example of the output is below. The text Success, the total number of match is 1 states that there is one match in the log for our pattern in the proxmox-web-gui.conf file.

fail2ban-regex /var/log/daemon.log /etc/fail2ban/filter.d/proxmox-web-gui.conf

Running tests

=============

Use regex file : /etc/fail2ban/filter.d/proxmox-web-gui.conf

Use log file : /var/log/daemon.log

Results

=======

Failregex

|- Regular expressions:

| [1] pvedaemon\[[0-9]+\]: authentication failure; rhost=<HOST> user=.* msg=.*

|

`- Number of matches:

[1] 1 match(es)

Ignoreregex

|- Regular expressions:

|

`- Number of matches:

Summary

=======

Addresses found:

[1]

10.27.4.98 (Fri May 29 12:31:14 2015)

Date template hits:

770 hit(s): MONTH Day Hour:Minute:Second

0 hit(s): WEEKDAY MONTH Day Hour:Minute:Second Year

0 hit(s): WEEKDAY MONTH Day Hour:Minute:Second

0 hit(s): Year/Month/Day Hour:Minute:Second

0 hit(s): Day/Month/Year Hour:Minute:Second

0 hit(s): Day/Month/Year Hour:Minute:Second

0 hit(s): Day/MONTH/Year:Hour:Minute:Second

0 hit(s): Month/Day/Year:Hour:Minute:Second

0 hit(s): Year-Month-Day Hour:Minute:Second

0 hit(s): Year.Month.Day Hour:Minute:Second

0 hit(s): Day-MONTH-Year Hour:Minute:Second[.Millisecond]

0 hit(s): Day-Month-Year Hour:Minute:Second

0 hit(s): TAI64N

0 hit(s): Epoch

0 hit(s): ISO 8601

0 hit(s): Hour:Minute:Second

0 hit(s): <Month/Day/Year@Hour:Minute:Second>

Success, the total number of match is 1

However, look at the above section 'Running tests' which could contain important

information.

Restart fail2ban for the new Jail to be loaded.

service fail2ban restart

To check your new Jail has been loaded, run the following command and look for the proxmox-web-gui Jail name next to Jail List.

fail2ban-client -v status

INFO Using socket file /var/run/fail2ban/fail2ban.sock

Status

|- Number of jail: 2

`- Jail list: ssh, proxmox-web-gui

Try to log into the Proxmox Web GUI with incorrect credentials 3 times and see your IP address appear in the Currently banned section.

fail2ban-client -v status proxmox-web-gui

INFO Using socket file /var/run/fail2ban/fail2ban.sock

Status for the jail: proxmox-web-gui

|- filter

| |- File list: /var/log/daemon.log

| |- Currently failed: 0

| `- Total failed: 3

`- action

|- Currently banned: 1

| `- IP list: 10.10.10.10

`- Total banned: 1

Proxmox has today released a new version of Proxmox VE, Proxmox 3.2 which is available as either a downloadable ISO or from the Proxmox repository.

Proxmox has today released a new version of Proxmox VE, Proxmox 3.2 which is available as either a downloadable ISO or from the Proxmox repository.

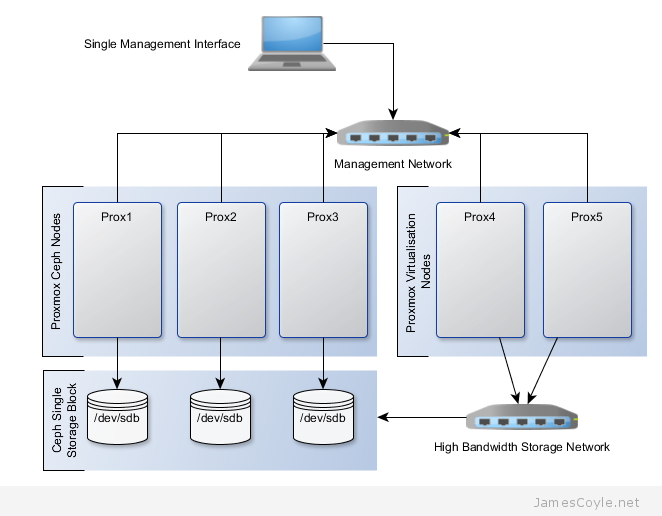

Ceph is an open source storage platform which is designed for modern storage needs. Ceph is scalable to the exabyte level and designed to have no single points of failure making it ideal for applications which require highly available flexible storage.

Ceph is an open source storage platform which is designed for modern storage needs. Ceph is scalable to the exabyte level and designed to have no single points of failure making it ideal for applications which require highly available flexible storage.

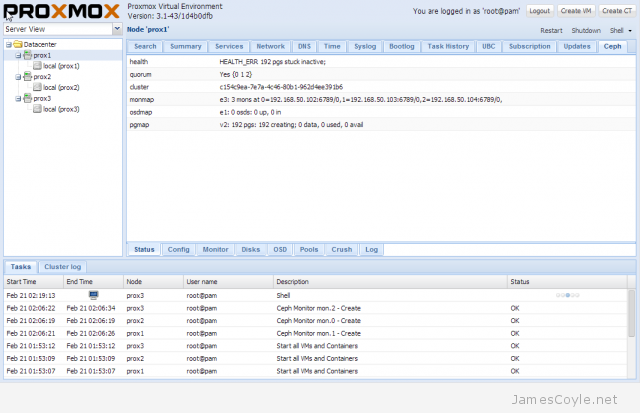

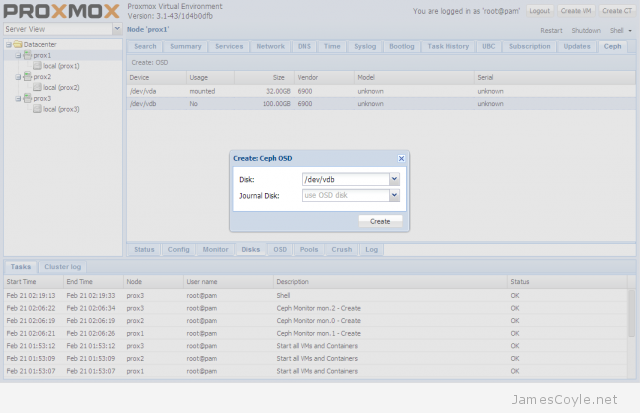

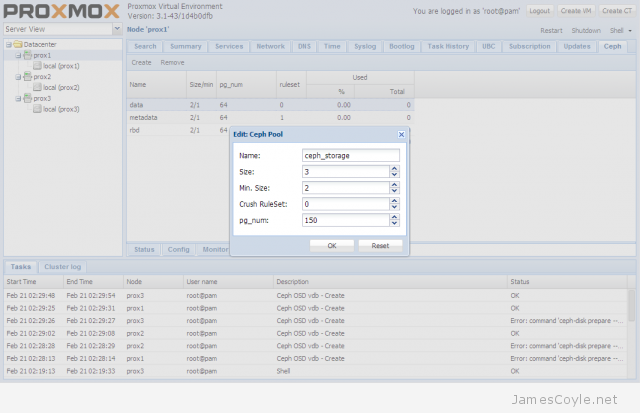

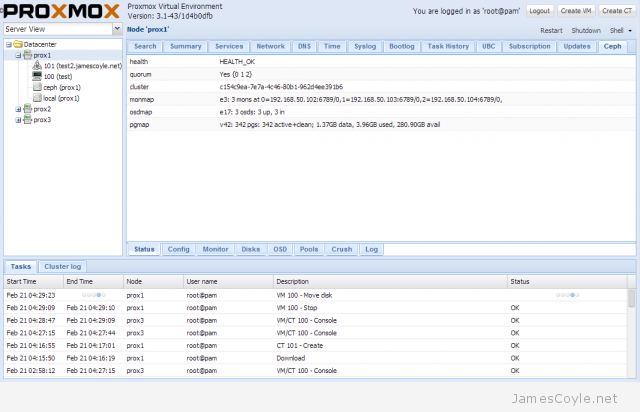

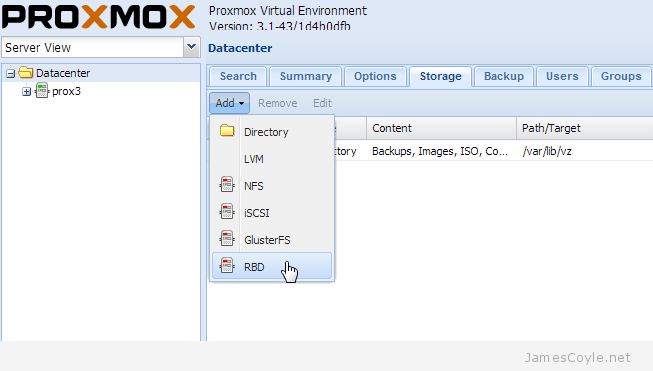

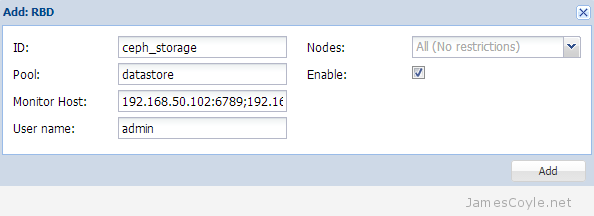

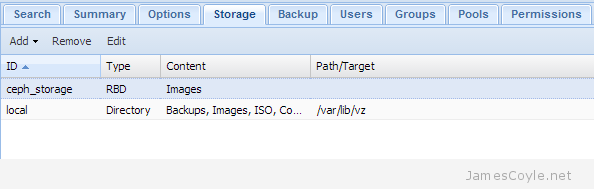

The rest of this tutorial will assume that you have three nodes which are all clustered into a single Proxmox cluster. I will refer to three host names which are all resolvable via my LAN DNS server; prox1, prox2 and prox3 which are all on the jamescoyle.net domain. The image to the left is what is displayed in the Proxmox web GUI and details all three nodes in a single Proxmox cluster. Each of these nodes has two disks configured; one which Proxmox is installed onto and provides a small ‘local’ storage device which is displayed in the image to the left and one which is going to be used for the Ceph storage. The below output shows the storage available, which is exactly the same on each host. /dev/vda is the root partition containing the Proxmox install and /dev/vdb is an untouched partition which will be used for Ceph.

The rest of this tutorial will assume that you have three nodes which are all clustered into a single Proxmox cluster. I will refer to three host names which are all resolvable via my LAN DNS server; prox1, prox2 and prox3 which are all on the jamescoyle.net domain. The image to the left is what is displayed in the Proxmox web GUI and details all three nodes in a single Proxmox cluster. Each of these nodes has two disks configured; one which Proxmox is installed onto and provides a small ‘local’ storage device which is displayed in the image to the left and one which is going to be used for the Ceph storage. The below output shows the storage available, which is exactly the same on each host. /dev/vda is the root partition containing the Proxmox install and /dev/vdb is an untouched partition which will be used for Ceph.
